11/28/09

RotoCap and Microcontroller Applications

Good news: my thesis paper has received initial acceptance from my committee, which frees me to continue research in the fascinating world of human-computer interaction. The Arduino is an open-source microcontroller platform from Italy. It's cheap, well documented, and supported by numerous online communities, each with its own niche (DIY drones, music production, art installations). Above is my tinkering, which will soon be connected to rotoCap to make use of its color-handling and vision functions.

9/15/09

How Color-Tracking Enhances Sony's PS3 Motion Controller

Sony's motion interface uses not only accelerometers, like the Wii, but also the color emitted from the sphere to keep track of the different controllers. Since the sphere has known dimensions, Z-depth can also be extracted from its apparent size in the image.
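The depth idea can be sketched with the standard pinhole-camera relation: distance = focal length (in pixels) x real diameter / apparent diameter (in pixels). This is only an illustration of the principle, not Sony's implementation; the function name and the numbers below are made up for the example.

```python
def depth_from_sphere(focal_length_px, real_diameter, pixel_diameter):
    """Estimate distance to a sphere of known size using the pinhole model.

    focal_length_px: camera focal length expressed in pixels (assumed known).
    real_diameter:   physical sphere diameter, in the unit wanted for the result.
    pixel_diameter:  diameter of the sphere as measured in the image, in pixels.
    """
    if pixel_diameter <= 0:
        raise ValueError("sphere must be visible to estimate depth")
    return focal_length_px * real_diameter / pixel_diameter


# Illustrative numbers only: a 0.045 m sphere that appears 36 px wide
# through a lens with an 800 px focal length.
z = depth_from_sphere(800.0, 0.045, 36.0)
```

As the sphere moves away, its pixel diameter shrinks and the estimated depth grows in proportion.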

8/4/09

8/3/09

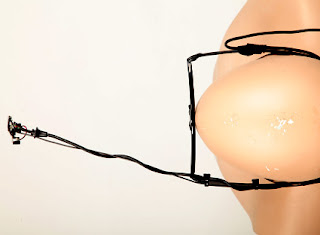

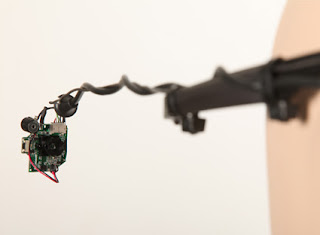

rotoCap Successfully Controls a Character

This is a test movie of my MFA thesis project, rotoCap. The person is repeating "Hello world" in different ways to drive the controllers of the 3D character ("Andy" by John Doublestein) using only three markers. The performance was shot on a scratch-built face-camera rig made from:

- discarded webcam

- used plastic 3D glasses

- steel baling wire

- heat shrink wire wrap

5/14/09

Tracking fast movement and optimized centroid detection

I have updated the search method to handle motion blur and to find centroids faster and with greater accuracy. In this video I show how easy it is to set up targeting and do simple key cleanup. The cleanup portion of the video will soon be automated and offered as an option to the user.

4/14/09

Arduino-based Motion Capture System

Another mocap project based on the Arduino microcontroller, from: http://blog.makezine.com/archive/2009/04/wireless_inertial_data_glove_using.html

Wireless Inertial Arduino Data Glove v0.3 from Noah Zerkin on Vimeo.

This is a quick vid of one of my later prototype glove units. The software is a proof-of-concept exercise. Regardless, you should be able to get the gist of what I'm trying to do. The thing that makes this special is how insanely inexpensive it was to build. The ultimate aim is to create a low-cost modular full-body interaction capture system for use in gaming, AR, and creative applications. The first commercial app I'm targeting for integration is Maya. After that, we'll look at integration with a game engine. I'm not sure what a glove/arm kit will cost, but it should be under $250 (Perhaps well under... we'll see... there are costs besides parts). Mass-production units would cost considerably less.

3/25/09

Making rotoCap Data Useful

To adapt to each user's situation, I have come up with a scheme to reinterpret the data coming from rotoCap into a usable format. Motion data such as this is converted to keyable attributes, which can drive the user's own character rigs or anything else that can be wired up via the Connection Editor. All of this is done by essentially drawing the slider around the drone.

The image above shows the bounding box of the sphere's motion extremes across the image surface. The dark triangles are leftover diagnostic graphics; ignore them. Scaling the green box redefines the parameter range of the drone (sphere) outputs by clamping the X and Y attributes. Additional number fields will be added to the drone's GUI section for North, South, East, and West. Each field will define the extreme value for that direction, or tell rotoCap to ignore that parameter or clamp it to infinity. The user should be able to just plug in the extreme values from the control rig; blank fields are ignored:

- yourFaceRig_eyeControlSlider.minY = 0.0 = drone.South

- yourFaceRig_eyeControlSlider.maxY = 1.0 = drone.North

Simulation

User Defined Drone Attributes

- Target Name = eyeControlSlider

- North = 1.0

- South = 0.0

- West = SKIP //ignore

- East = SKIP //ignore

A value of .85 would equal a slightly closed eye, or 85% open. The eye is parented to a null dummy node, which acts as a buffer between rotoCap and the user's rig in case the user needs to remove part or all of rotoCap from a scene, which is actually the ultimate goal. rotoCap should work behind the curtains and leave as little impact on a scene as possible beyond passing attributes in the form of a bare-bones node network.
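The remapping described above can be sketched in a few lines of Python. This is a rough illustration under my own assumptions, not rotoCap's actual code: `remap_axis`, the `SKIP` marker, and the box coordinates are hypothetical names and values chosen for the example.

```python
SKIP = None  # hypothetical marker for a direction the user wants ignored


def remap_axis(value, box_low, box_high, out_low, out_high):
    """Map a drone position inside the green box onto the rig's slider range.

    value:             drone coordinate along one axis (image space).
    box_low/box_high:  box edges on that axis (e.g. South and North).
    out_low/out_high:  extreme values typed into the drone's fields,
                       or SKIP to ignore the axis entirely.
    """
    if out_low is SKIP or out_high is SKIP:
        return None  # axis ignored, nothing is passed to the rig
    if box_high == box_low:
        return out_low  # degenerate box, avoid dividing by zero
    t = (value - box_low) / (box_high - box_low)
    t = max(0.0, min(1.0, t))  # clamp to the box edges
    return out_low + t * (out_high - out_low)


# Eye slider: South = 0.0, North = 1.0; East/West are skipped.
# Assume the box spans 0.2 to 0.6 in image Y and the drone sits at 0.54.
eye_open = remap_axis(0.54, 0.2, 0.6, 0.0, 1.0)      # roughly .85
sideways = remap_axis(0.30, 0.1, 0.9, SKIP, SKIP)    # ignored axis
```

The result would be written to the buffer node's attribute rather than directly to the rig, so rotoCap can be removed from the scene without touching the user's controls.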

3/2/09

rotoCap Progress (Updated)

The past weekend has yielded many successes. After finally fixing a bug that kept the frame from advancing properly, I've fully re-engineered the core tracking engine and even run some benchmark tests, which will be posted shortly (updated, see below). The movie above shows the raw output, a single pass with the "Simplify Curves" command from the Graph Editor, and a final hand-tweaked version. The raw output creates lots of keyframes, not all of which are necessary. Keeping only the important keyframes makes the final manual cleanup much easier because there are fewer keys to work with.

Test Results & Observations

Environment

- 50 JPG image sequence

- 1 Drone sampled (Pink)

- timerX MEL function for timing

- Tolerance: (0.0 to 1.0) range of RGB value rotoCap accepts as close enough to desired color.

- Step: (0.0 to 1.0) distance to next sampled UV coordinate

- # of Keys: how many keyframes rotoCap created for a drone

- Time Elapsed: (seconds) starts when "Launch Drones" is pressed, ends after last drone is tested

Test 1

- Tolerance .2

- Step .05

- # of Keys 7

- Time Elapsed = 101.47

Almost all of the keys occurred at the beginning, while the target color was stationary. Tracking was not detecting motion over time. I suspected the step size was too large and rotoCap just couldn't sample enough UVs to find the drone.
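To show how a large step can skip over a small target, here is a rough Python sketch of a grid survey using the Tolerance and Step parameters defined above. It is not rotoCap's MEL code: `color_seek`, `matches`, the image layout, and the test frame are assumptions made for the illustration.

```python
def matches(pixel, target, tolerance):
    """True when every RGB channel is within tolerance of the target color."""
    return all(abs(p - t) <= tolerance for p, t in zip(pixel, target))


def color_seek(image, target, tolerance, step):
    """Survey the UV plane from the origin and return the first matching (u, v).

    image is a list of rows of (r, g, b) tuples with channels in 0.0-1.0.
    Returns None when no sampled coordinate matches.
    """
    height, width = len(image), len(image[0])
    samples = int(round(1.0 / step)) + 1  # grid points per axis, edges included
    for j in range(samples):
        v = min(j * step, 1.0)
        y = min(int(v * (height - 1) + 0.5), height - 1)
        for i in range(samples):
            u = min(i * step, 1.0)
            x = min(int(u * (width - 1) + 0.5), width - 1)
            if matches(image[y][x], target, tolerance):
                return (u, v)
    return None


# A 41x41 black frame with one pink pixel sitting between coarse grid points.
PINK = (1.0, 0.4, 0.7)
frame = [[(0.0, 0.0, 0.0)] * 41 for _ in range(41)]
frame[1][1] = PINK

coarse = color_seek(frame, PINK, tolerance=0.2, step=0.05)   # every 2nd pixel
fine = color_seek(frame, PINK, tolerance=0.2, step=0.025)    # every pixel
```

In this sketch the coarse survey misses the pixel and the fine one finds it, but halving the step also quadruples the number of samples per frame.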

Test 2: Smaller Step Size/Centering Off

- Tolerance .2

- Step .025

- # of Keys 16

- Time Elapsed = 1217.69

Reducing the step turned out to fix the sparse key issue. rotoCap still seemed to favor the edge of the color mass instead of its center, which makes sense since this test was run with self-centering turned off.
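The edge bias happens because a survey stops at the first matching sample, which is usually on the border of the color mass. One simple way to center, sketched here in Python, is to average the coordinates of all matching pixels near the first hit. This is an assumed approach for illustration only; `center_on_mass` and the window radius are hypothetical and may differ from what rotoCap's centering sub-process does.

```python
def matches(pixel, target, tolerance):
    """True when every RGB channel is within tolerance of the target color."""
    return all(abs(p - t) <= tolerance for p, t in zip(pixel, target))


def center_on_mass(image, target, tolerance, hit_x, hit_y, radius):
    """Return the centroid (x, y) of matching pixels in a window around a hit.

    The window is a square of side 2 * radius + 1, clipped to the image.
    Returns None if nothing in the window matches.
    """
    height, width = len(image), len(image[0])
    xs, ys = [], []
    for y in range(max(0, hit_y - radius), min(height, hit_y + radius + 1)):
        for x in range(max(0, hit_x - radius), min(width, hit_x + radius + 1)):
            if matches(image[y][x], target, tolerance):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


# A 10x10 black frame with a 3x3 pink blob covering pixels 4..6 on both axes.
PINK = (1.0, 0.4, 0.7)
frame = [[(0.0, 0.0, 0.0)] * 10 for _ in range(10)]
for row in range(4, 7):
    for col in range(4, 7):
        frame[row][col] = PINK

# The survey first touched the blob at its corner (4, 4).
centroid = center_on_mass(frame, PINK, 0.2, hit_x=4, hit_y=4, radius=3)
```

The centroid lands in the middle of the blob even though the first hit was on its corner.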

Test 3: Centering On

- Tolerance .2

- Step .025

- # of Keys 35 (simplified: 17)

- Time Elapsed = 71.86

The time difference is substantial because the centering sub-process passes the centered UV coordinates to the next cycle of the colorSeek process. Key generation also went up significantly. Running "Simplify Curves" on the X and Y curves in the Graph Editor essentially deletes the unnecessary stationary keyframes. I'm very happy with how well the script is working now.
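The effect of removing stationary keyframes can be approximated with a small Python sketch. Note that this is not Maya's Simplify Curves algorithm, just a simplified stand-in that drops keys whose value matches both neighbours; `drop_stationary_keys` and the sample data are hypothetical.

```python
def drop_stationary_keys(keys, epsilon=1e-6):
    """Remove interior keys whose value equals both neighbouring keys.

    keys is a list of (frame, value) pairs sorted by frame. The first and
    last keys are always kept so the curve's time range is preserved.
    """
    if len(keys) <= 2:
        return list(keys)
    kept = [keys[0]]
    for prev, cur, nxt in zip(keys, keys[1:], keys[2:]):
        changed_before = abs(cur[1] - prev[1]) > epsilon
        changed_after = abs(cur[1] - nxt[1]) > epsilon
        if changed_before or changed_after:
            kept.append(cur)
    kept.append(keys[-1])
    return kept


# The drone sits still for four frames, then starts moving.
raw = [(1, 0.5), (2, 0.5), (3, 0.5), (4, 0.5), (5, 0.8), (6, 0.9)]
simplified = drop_stationary_keys(raw)
```

Here the keys on frames 2 and 3 are dropped, while frame 4 is kept because it marks where the motion begins.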

2/28/09

Alternate Search Modes

While working on rotoCap today, I thought a nice feature would be to let the user manually launch rotoCap on a per-frame basis instead of running the whole image sequence. Additionally, I am adding a flag to the keying sub-process that controls whether to overwrite existing keyframes on a drone. If existing keyframes are found, the engine can use them as initial search coordinates, saving time by not having to start at the origin of the UV plane during the initial UV survey.
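A minimal Python sketch of both ideas, assuming the survey works from a list of candidate UV samples: test the samples nearest an existing key first, and consult an overwrite flag before keying. `search_order` and `should_write_key` are hypothetical helpers, not part of rotoCap.

```python
def search_order(samples, seed):
    """Order UV samples so those nearest the seed coordinate are tested first.

    samples is a list of (u, v) pairs; seed is the (u, v) of an existing
    keyframe, or None to keep the default origin-first order.
    """
    if seed is None:
        return list(samples)
    return sorted(
        samples,
        key=lambda uv: (uv[0] - seed[0]) ** 2 + (uv[1] - seed[1]) ** 2,
    )


def should_write_key(existing_frames, frame, overwrite):
    """Decide whether the keying step may set a key on this frame."""
    return overwrite or frame not in existing_frames


grid = [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]
seeded = search_order(grid, seed=(0.9, 0.9))   # starts near the old key
unseeded = search_order(grid, seed=None)       # starts at the origin
```

With a seed, a drone that has barely moved since the last key is found after only a few samples instead of a full sweep from the origin.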
